We want to stream between two EVM boards: one captures and encodes the audio/video, and the other displays it.

Today we got this working fairly well, but only for a while. The decoder side (client) crashes, sometimes after a couple of seconds and other times after several minutes.

The error was:

ERROR: from element /GstPipeline:pipeline0/GstTIViddec2:tividdec20: fatal bit error

The commands we used were:

Encoder/server:


gst-launch v4l2src always-copy=FALSE ! 'video/x-raw-yuv,format=(fourcc)NV12,framerate=(fraction)30/1,width=(int)1280,height=(int)720' ! TIVidenc1 engineName=codecServer codecName=h264enc contiguousInputFrame=TRUE ! rtph264pay pt=96 config-interval=1 ! udpsink host=175.14.0.209 port=5000 sync=false
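The encoder command above can be kept in a small shell function so the destination host and port live in one place. This is only a sketch: start_sender and the GST_LAUNCH override are hypothetical names introduced here, and the element properties are exactly those from the original command.

```shell
#!/bin/sh
# Sketch: the encoder/server pipeline from the post, wrapped in a function.
# start_sender and GST_LAUNCH are hypothetical names added for illustration;
# set GST_LAUNCH=echo to print the arguments instead of launching GStreamer.
start_sender() {
    host="${1:-175.14.0.209}"   # address of the decoder/client board
    port="${2:-5000}"           # must match udpsrc port= on the client
    "${GST_LAUNCH:-gst-launch}" v4l2src always-copy=FALSE \
        ! 'video/x-raw-yuv,format=(fourcc)NV12,framerate=(fraction)30/1,width=(int)1280,height=(int)720' \
        ! TIVidenc1 engineName=codecServer codecName=h264enc contiguousInputFrame=TRUE \
        ! rtph264pay pt=96 config-interval=1 \
        ! udpsink host="$host" port="$port" sync=false
}
```

Running start_sender with no arguments launches the same pipeline as the command above; prefixing it with GST_LAUNCH=echo only prints the arguments, which is a quick way to check the quoting of the caps string.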

Decoder/client:

gst-launch -v udpsrc port=5000 caps="application/x-rtp,media=(string)video, payload=96, clock-rate=90000" ! rtph264depay ! typefind ! TIViddec2 codecName=h264dec engineName=codecServer ! TIDmaiVideoSink useUserptrBufs=true numBufs=3 videoStd=720P_60 videoOutput=component sync=false hideOSD=true

We start the client first, then the server. After about 5 seconds the video starts playing. After a couple of minutes (the exact time varies), the decoder exits with an error.

Output:

gst-launch -v udpsrc port=5000 caps="application/x-rtp,media=(string)video, payload=96, clock-rate=90000" ! rtph264depay ! typefind ! TIViddec2 codecName=h264dec engineName=codecServer ! TIDmaiVideoSink useUserptrBufs=true numBufs=3 videoStd=720P_60 videoOutput=component sync=false hideOSD=true

Setting pipeline to PAUSED ...

/GstPipeline:pipeline0/GstUDPSrc:udpsrc0.GstPad:src: caps = application/x-rtp, media=(string)video, payload=(int)96, clock-rate=(int)90000, encoding-name=(string)H264

Pipeline is live and does not need PREROLL ...

Setting pipeline to PLAYING ...

New clock: GstSystemClock

/GstPipeline:pipeline0/GstRtpH264Depay:rtph264depay0.GstPad:src: caps = video/x-h264

/GstPipeline:pipeline0/GstRtpH264Depay:rtph264depay0.GstPad:sink: caps = application/x-rtp, media=(string)video, payload=(int)96, clock-rate=(int)90000, encoding-name=(string)H264

/GstPipeline:pipeline0/GstTypeFindElement:typefindelement0.GstPad:src: caps = video/x-h264

/GstPipeline:pipeline0/GstTypeFindElement:typefindelement0.GstPad:sink: caps = video/x-h264

/GstPipeline:pipeline0/GstTIViddec2:tividdec20.GstPad:sink: caps = video/x-h264

/GstPipeline:pipeline0/GstTIViddec2:tividdec20.GstPad:src: caps = video/x-raw-yuv, format=(fourcc)NV12, framerate=(fraction)30000/1001, width=(int)1280, height=(int)720

*************NOTE: THIS IS WHERE IT STOPS WHEN IT HANGS…******************

/GstPipeline:pipeline0/GstTIDmaiVideoSink:tidmaivideosink0.GstPad:sink: caps = video/x-raw-yuv, format=(fourcc)NV12, framerate=(fraction)30000/1001, width=(int)1280, height=(int)720

davinci_v4l2 davinci_v4l2.1: Before finishing with S_FMT: layer.pix_fmt.bytesperline = 1280, layer.pix_fmt.width = 1280, layer.pix_fmt.height = 720, layer.pix_fmt.sizeimage =1382400

davinci_v4l2 davinci_v4l2.1: pixfmt->width = 1280, layer->layer_info.config.line_length= 1280
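As a sanity check on the driver output above: NV12 stores a full-resolution Y (luma) plane at one byte per pixel, followed by a single interleaved CbCr plane subsampled 2×2, i.e. 1.5 bytes per pixel. With bytesperline equal to the width (no row padding), a 1280×720 frame should be 1280 × 720 × 3/2 bytes. nv12_frame_bytes is a hypothetical helper, and it assumes unpadded rows.

```shell
#!/bin/sh
# NV12 = full-size Y plane + interleaved CbCr plane subsampled 2x2,
# i.e. 1.5 bytes per pixel, assuming no row padding (bytesperline == width).
nv12_frame_bytes() {
    width="$1"
    height="$2"
    y_plane=$((width * height))        # one luma byte per pixel
    uv_plane=$((width * height / 2))   # one Cb+Cr byte pair per 2x2 block
    echo $((y_plane + uv_plane))
}

nv12_frame_bytes 1280 720   # prints 1382400
```

The result matches sizeimage =1382400 in the log, so the display buffer size is consistent with the decoder's NV12 1280×720 output caps.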

*************NOTE: Starts playing normal video

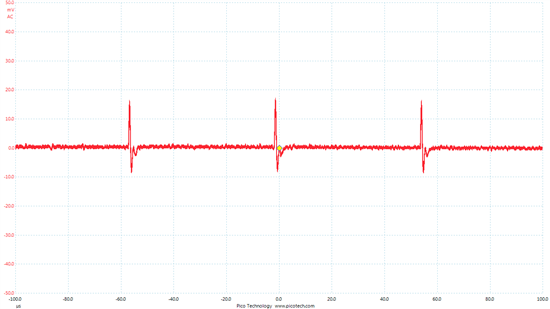

ERROR: from element /GstPipeline:pipeline0/GstTIViddec2:tividdec20: fatal bit error

Additional debug info:

gsttividdec2.c(1635): gst_tividdec2_decode_thread (): /GstPipeline:pipeline0/GstTIViddec2:tividdec20

Execution ended after 151664182917 ns.

Setting pipeline to PAUSED ...

Setting pipeline to READY ...

/GstPipeline:pipeline0/GstTIDmaiVideoSink:tidmaivideosink0.GstPad:sink: caps = NULL

/GstPipeline:pipeline0/GstTIViddec2:tividdec20.GstPad:src: caps = NULL

/GstPipeline:pipeline0/GstTIViddec2:tividdec20.GstPad:sink: caps = NULL

/GstPipeline:pipeline0/GstTypeFindElement:typefindelement0.GstPad:src: caps = NULL

/GstPipeline:pipeline0/GstTypeFindElement:typefindelement0.GstPad:sink: caps = NULL

/GstPipeline:pipeline0/GstRtpH264Depay:rtph264depay0.GstPad:src: caps = NULL

/GstPipeline:pipeline0/GstRtpH264Depay:rtph264depay0.GstPad:sink: caps = NULL

/GstPipeline:pipeline0/GstUDPSrc:udpsrc0.GstPad:src: caps = NULL

Setting pipeline to NULL ...

Freeing pipeline ...

End of debug
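The run time printed near the end of the log ("Execution ended after 151664182917 ns") can be converted to minutes to compare it with the observed crash timing. A sketch: ns_to_min_sec is a hypothetical helper, and it assumes the shell does 64-bit integer arithmetic (bash and dash do; a BusyBox shell built without 64-bit math support may overflow on a value this large).

```shell
#!/bin/sh
# Convert a GStreamer "Execution ended after N ns" value to minutes/seconds.
# ns_to_min_sec is a hypothetical helper; fractions of a second are dropped.
ns_to_min_sec() {
    total_s=$(( $1 / 1000000000 ))
    printf '%dm%02ds\n' $(( total_s / 60 )) $(( total_s % 60 ))
}

ns_to_min_sec 151664182917   # prints 2m31s
```

That is about 151.7 s, roughly two and a half minutes, which matches the "couple of minutes" before the decoder failed in this run.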

What can be done to fix the fatal bit error?
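One experiment that may be worth trying, offered as an untested sketch rather than a known fix: plain RTP over UDP gives the depayloader no protection against reordered or dropped packets, and a damaged H.264 slice reaching TIViddec2 is one plausible cause of the error. The variant below keeps the client pipeline from the post but inserts gstrtpjitterbuffer (the GStreamer 0.10 element name; it is rtpjitterbuffer in 1.x), which reorders RTP packets and takes a latency in milliseconds, and sets udpsrc's buffer-size, the kernel receive buffer in bytes. Whether the GStreamer build on the EVM exposes both should be confirmed with gst-inspect, and the kernel caps buffer-size at net.core.rmem_max. start_receiver, JB_LATENCY_MS, RX_BUFFER_BYTES and the default values are hypothetical choices.

```shell
#!/bin/sh
# Untested sketch of the decoder/client pipeline with two additions:
#   gstrtpjitterbuffer - GStreamer 0.10 element name (rtpjitterbuffer in 1.x);
#                        reorders RTP packets, latency is in milliseconds.
#   udpsrc buffer-size - kernel receive buffer in bytes (capped by
#                        net.core.rmem_max).
# start_receiver, JB_LATENCY_MS, RX_BUFFER_BYTES and the defaults are
# hypothetical; GST_LAUNCH=echo prints the arguments instead of running.
start_receiver() {
    port="${1:-5000}"
    "${GST_LAUNCH:-gst-launch}" -v udpsrc port="$port" \
        buffer-size="${RX_BUFFER_BYTES:-1048576}" \
        caps='application/x-rtp,media=(string)video,payload=96,clock-rate=90000' \
        ! gstrtpjitterbuffer latency="${JB_LATENCY_MS:-200}" \
        ! rtph264depay ! typefind \
        ! TIViddec2 codecName=h264dec engineName=codecServer \
        ! TIDmaiVideoSink useUserptrBufs=true numBufs=3 videoStd=720P_60 \
        videoOutput=component sync=false hideOSD=true
}
```

A jitter buffer can reorder packets but cannot restore lost ones, so if the error persists, the UDP counters in /proc/net/snmp on the client may show whether datagrams are being dropped.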

Also, how do we fix the problem where the client MUST be started before the server?

Thanks

Bill